As artificial intelligence (AI) continues to advance, the need to efficiently manage and optimize its deployment in real-world scenarios becomes increasingly crucial.

In this post, I’ll discuss three key areas of AI operations: AIOps, MLOps, and the emerging LLMOps. I’ll explain each concept, highlight the key differences among them, and explore where they intersect.

AIOps: Enhancing IT Operations with AI

What is AIOps?

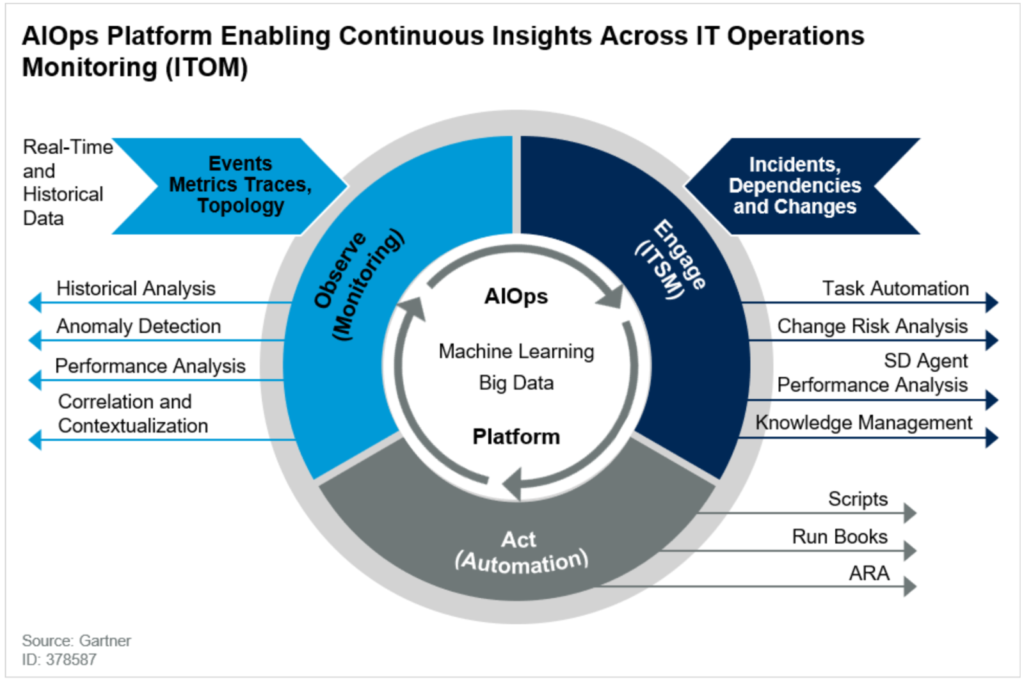

AIOps, or artificial intelligence for IT operations, uses AI and machine learning to improve and automate IT processes. As businesses grow more dependent on digital infrastructure, managing complex IT environments manually has become impractical. AIOps automates repetitive tasks, flags potential issues before they cause outages, and optimizes IT performance in real time.

The core objective of AIOps is to ensure that IT systems run efficiently with minimal human intervention. It automates tasks typically handled by IT teams, such as anomaly detection, root cause analysis, and incident management.

Core Components of AIOps

AIOps involves several essential components:

Data Collection: Continuously gathering data from various IT sources, including logs, metrics, and events.

Monitoring: Real-time monitoring of IT infrastructure to detect anomalies and performance issues.

Analysis: Applying AI and machine learning to analyze data, identify patterns, and predict problems.

Automation: Automating tasks like patch management, incident response, and resource allocation.
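To make the monitoring and analysis components more concrete, here is a minimal sketch of one common anomaly detection technique: a rolling z-score over a metric stream. It is not how any particular AIOps platform works. The function name `detect_anomalies`, the window size, the threshold, and the sample CPU readings are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of points far from the mean of the trailing window.

    A point is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` points.
    """
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip flat windows to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Hypothetical CPU utilization samples (percent); index 6 is a spike.
cpu_percent = [41, 43, 40, 42, 44, 41, 95, 43, 42]
print(detect_anomalies(cpu_percent))  # → [6]
```

Note that the flagged point is still added to the window, which temporarily inflates the baseline. A production system would likely exclude or down-weight anomalies and use a more robust statistic.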

AIOps Tools for Automation and Analytics

Popular AIOps tools include platforms like Splunk, Moogsoft, and Dynatrace. These tools provide the analytics and automation needed to keep IT operations running smoothly, freeing organizations to focus on innovation rather than problem-solving.

Real-World Applications of AIOps

AIOps is used across industries. In finance, for example, it helps keep trading platforms secure and operational, reducing the risk of costly downtime. In telecommunications, it helps maintain network stability and optimize bandwidth usage to support uninterrupted customer service.

By automating the detection and resolution of IT issues, AIOps lightens the load on IT teams, allowing them to focus on strategic initiatives and improving operational efficiency.

MLOps: Streamlining the Machine Learning Lifecycle

What is MLOps?

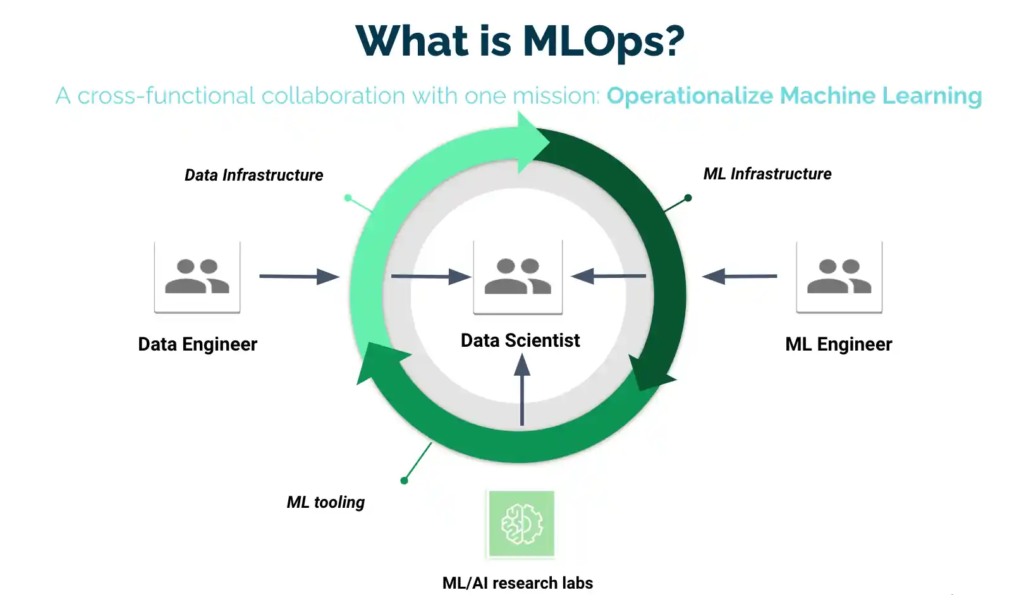

MLOps, or machine learning operations, is a set of practices and tools that manage the lifecycle of machine learning models. While data scientists develop models, MLOps ensures they are scalable, reliable, and ready for deployment in production environments.

MLOps is essential for organizations that use machine learning to drive business decisions. It provides a framework for handling the complexities of deploying, monitoring, and maintaining machine learning models at scale, ensuring they continue to deliver accurate results over time.

How MLOps Differs from AIOps

While AIOps focuses on optimizing IT infrastructure, MLOps addresses the challenges of deploying and maintaining machine learning models. These challenges include data versioning, model retraining to prevent drift, and integrating models into CI/CD (continuous integration/continuous deployment) pipelines.

MLOps ensures that machine learning models are continuously monitored and updated as new data emerges and business needs evolve. This requires a combination of data engineering, model management, and DevOps practices, bridging the gap between data science and IT operations.
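As a rough illustration of data versioning, the sketch below derives a deterministic fingerprint from a dataset and records it next to the hyperparameters and code version. Dedicated tools handle this far more thoroughly. The helper names `fingerprint` and `build_manifest`, the sample records, and the commit string are hypothetical.

```python
import hashlib
import json


def fingerprint(obj):
    """Return a short, deterministic hash of a JSON-serializable object."""
    # sort_keys makes the hash independent of dictionary key order.
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def build_manifest(train_records, hyperparams, code_version):
    """Bundle the identifiers needed to reproduce one training run."""
    return {
        "data_version": fingerprint(train_records),
        "params_version": fingerprint(hyperparams),
        "code_version": code_version,
    }


# Hypothetical training data and settings.
records = [{"age": 34, "churned": 0}, {"age": 51, "churned": 1}]
manifest = build_manifest(records, {"lr": 0.01, "epochs": 5}, "commit-abc123")
print(manifest)
```

If any record changes, `data_version` changes with it, so a deployed model can be traced back to the exact data it was trained on.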

MLOps Best Practices

Key MLOps best practices include:

Versioning: Tracking data, models, and code versions for reproducibility and traceability.

Automation: Streamlining model deployment with automated CI/CD pipelines.

Monitoring: Continuously monitoring model performance to detect drift and bias.

By implementing these best practices, organizations can ensure their machine learning models remain effective and aligned with evolving business objectives.
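The monitoring practice above can be sketched with the population stability index (PSI), one of several statistics used to compare a feature's training distribution with what a model sees in production. This is a simplified version under stated assumptions: equal-width bins taken from the training sample, a small floor to avoid empty bins, and made-up data. The often-quoted cutoff of roughly 0.25 for "significant" drift is a rule of thumb, not a standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two numeric samples; 0.0 means identical binned distributions."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all training values identical; fall back to one wide bin

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp so values outside the training range land in edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor at a tiny value so the logarithm below is always defined.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training data vs. shifted production data.
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(training, training))    # no drift
print(population_stability_index(training, production))  # large value: drift
```

A scheduled job could compute this per feature and trigger retraining or an alert when the value crosses a threshold the team has agreed on.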

Tools for MLOps

Tools like TensorFlow Extended (TFX), Kubeflow, and MLflow are commonly used for MLOps. They help manage the entire machine learning lifecycle, from data ingestion and model training to deployment and monitoring.

LLMOps: Managing Large Language Models

What is LLMOps?

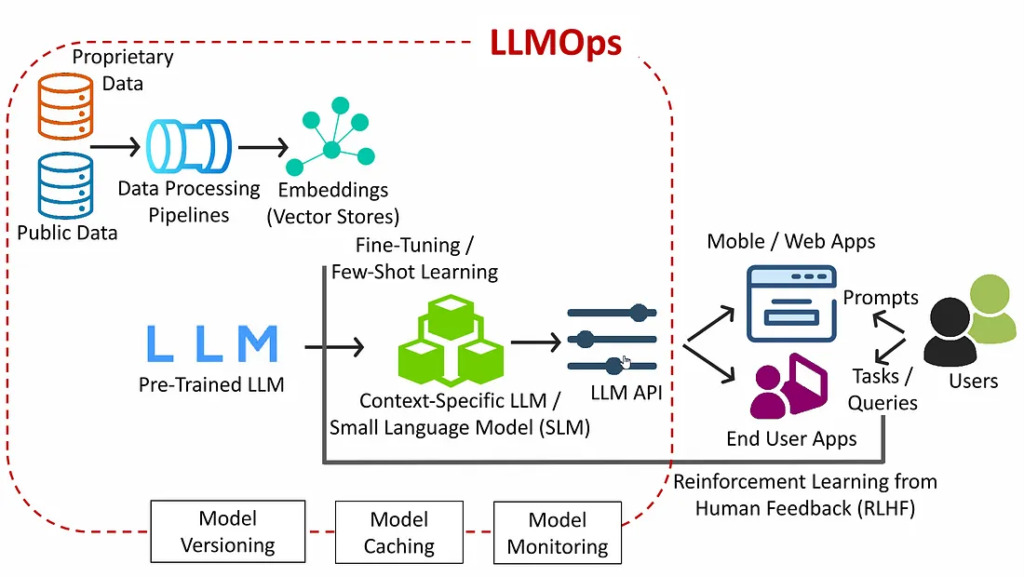

With the rise of large language models (LLMs) like GPT, Claude, and Llama, organizations need dedicated operational practices to manage them. LLMOps, or large language model operations, is a specialized branch of MLOps focused on the unique challenges associated with deploying and maintaining LLMs.

LLMOps deals with the complexities of managing LLMs, which are significantly larger and more complex than traditional models. Operating them calls for specialized tooling for fine-tuning, for chaining model calls to handle complex tasks, and for monitoring behavior in real time.

Challenges Unique to LLMOps

LLMOps introduces several unique challenges, such as:

Prompt Engineering: Crafting and managing prompts to guide models in producing relevant outputs.

LLM Chaining: Combining multiple model calls in a sequence to handle complex tasks.

Real-Time Observability: Monitoring performance to detect issues like drift, bias, or degradation.

These challenges necessitate a tailored approach to managing LLMs, making LLMOps a vital practice for organizations utilizing these models.
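Prompt management and chaining can be illustrated without any particular framework. In the sketch below, prompts live in named templates and the output of one model call becomes the input of the next. The `llm` argument is any function that maps a prompt string to a response string, and `fake_llm` is a stand-in so the example runs offline. None of these names come from a real library.

```python
from string import Template
from typing import Callable

# Prompts kept as named templates so they can be reviewed and versioned.
SUMMARIZE = Template("Summarize the following text in one sentence:\n$text")
TRANSLATE = Template("Translate the following sentence into $language:\n$text")


def run_chain(document: str, language: str, llm: Callable[[str], str]) -> str:
    """Two-step chain: summarize the document, then translate the summary."""
    summary = llm(SUMMARIZE.substitute(text=document))
    return llm(TRANSLATE.substitute(language=language, text=summary))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; echoes the last line of the prompt."""
    return f"[model output for: {prompt.splitlines()[-1]}]"


print(run_chain("AIOps automates IT operations.", "French", fake_llm))
```

Passing the model in as a plain callable keeps the chain testable: swapping `fake_llm` for a real client changes nothing else in the code.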

Tools for LLMOps

Specialized tools such as LangChain, an orchestration framework, and Weaviate, a vector database, support LLMOps workflows. They assist with prompt engineering, LLM chaining, and retrieval-augmented generation (RAG). Weights & Biases (W&B) also offers tracking and visualization tools for LLM training, fine-tuning, and performance monitoring.
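To show the idea behind retrieval-augmented generation, here is a toy sketch: find the stored document most similar to the question, then place it in the prompt as context. Real systems use learned embeddings and a vector database. This version substitutes simple word counts and cosine similarity, and every function name and sample document is an assumption made for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag of lowercase word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble a prompt that grounds the model in retrieved context."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base.
docs = [
    "MLflow tracks experiments and models",
    "Dynatrace monitors IT infrastructure",
    "LangChain chains LLM calls together",
]
print(build_prompt("Which tool monitors infrastructure?", docs))
```

The resulting prompt would then be sent to the model, which answers from the retrieved text rather than from its training data alone.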

Comparing AIOps, MLOps, and LLMOps

While AIOps focuses on automating IT operations, MLOps is dedicated to the machine learning model lifecycle, and LLMOps tackles the specific challenges of large language models. LLMOps can be seen as an extension of MLOps, with new tools and practices designed to meet the unique demands of LLMs.

When to Use AIOps, MLOps, and LLMOps

AIOps is best for optimizing IT infrastructure and ensuring consistent system performance.

MLOps is essential for managing the lifecycle of machine learning models, from development to deployment and monitoring.

LLMOps is critical for organizations working with large language models, ensuring proper handling of their complexity.

Although each area serves a distinct purpose, they share common practices like continuous monitoring and performance optimization, aiming for efficiency and scalability.

The Future of AI Operations

As AI continues to evolve, so will the operational practices that support it. While AIOps, MLOps, and LLMOps are distinct today, they may converge as tools and techniques advance. Staying ahead by adopting the latest AI operational practices will position organizations to fully harness the potential of AI in the future.