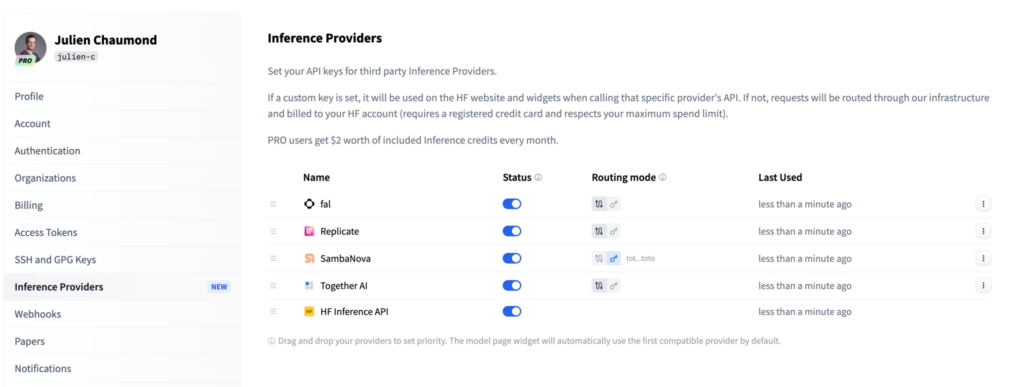

AI development platform Hugging Face has launched “Inference Providers,” a new feature that makes it simpler to run AI models on third-party cloud infrastructure. Built in collaboration with cloud vendors including SambaNova, Fal, Replicate, and Together AI, it lets developers on Hugging Face deploy and scale models on those partners' services.

Previously, Hugging Face focused primarily on its own in-house solution for running models. The company has since shifted its focus toward collaboration, storage, and model distribution. By partnering with cloud providers, it aims to give developers unified access to serverless inference.

Serverless inference, offered by partners such as SambaNova, automates management of the underlying hardware, so developers can deploy and scale models without configuring infrastructure by hand. Developers can now spin up models on partner servers directly from the Hugging Face platform in a few clicks.
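
The same workflow can also be driven from code. The following is a minimal sketch, not an official recipe: it assumes a recent `huggingface_hub` release whose `InferenceClient` accepts a `provider` argument, and a Hugging Face access token stored in an `HF_TOKEN` environment variable. The helper names `build_chat_request` and `run_on_provider` are hypothetical, introduced here only for illustration.

```python
import os


def build_chat_request(prompt: str, max_tokens: int = 128) -> dict:
    """Build the keyword arguments for a single-turn chat request.

    Hypothetical helper: keeps the request shape separate from the network call.
    """
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def run_on_provider(provider: str, model: str, prompt: str) -> str:
    """Send one chat request to a partner provider through Hugging Face.

    Assumptions: huggingface_hub is installed in a version that supports the
    `provider` argument, HF_TOKEN is set, and the chosen provider actually
    serves the requested model.
    """
    # Imported lazily so the helper above can be used without the library.
    from huggingface_hub import InferenceClient

    client = InferenceClient(provider=provider, api_key=os.environ["HF_TOKEN"])
    response = client.chat_completion(model=model, **build_chat_request(prompt))
    return response.choices[0].message.content
```

A call such as `run_on_provider("sambanova", "meta-llama/Llama-3.1-8B-Instruct", "Hello")` would route the request to the named partner. Whether that particular model is available on that provider is an assumption to check on the model's page. Switching partners then only means changing the `provider` string.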

Hugging Face emphasizes that the collaboration gives developers flexibility in choosing the cloud infrastructure best suited to their needs. Usage of third-party providers will be billed at each provider's standard API rates, and Hugging Face may explore revenue-sharing agreements with partners in the future.

Founded in 2016, Hugging Face has emerged as a leading AI model hosting and development platform, having raised close to $400 million in funding from prominent investors. The company claims to be profitable.